We decided to try something new…

Instead of your standard “How-To” content, we thought we would up the stakes. Let people submit a problem via a locked “Vault” and when we flip the camera on, it unlocks and we get to solving it.

The problem submitted was:

I lack a reliable, low-friction system that captures and regularly resurfaces each client’s long-term personal context and last-session commitments before calls, so I show up unprepared and risk renewals and family financial stability.

(Note: This was the output of using the 5 Why’s Prompt to Drill to help clarify the real problem, which is why it sounds like AI.)

As I read it out loud, every instinct inside of me is screaming the same thing…

Start. Building. Now.

AI is incredible. With a few taps of the keys and a few hits of the enter key, you can send it off to do your bidding and it will return an answer that sounds like a senior-level consultant worked on it for weeks.

But just because it sounds good doesn’t mean it is good.

So I tell my instincts to chill for a second and instead say: “To me, it’s still not drilled down enough.”

I remove myself from “answer now” mode and notice something I’d never put into words before.

The Layers Underneath

I ask Claude to unbundle the problem from first principles.

What is this person actually trying to accomplish?

Claude comes back with layers…lots of them:

“The surface-level framing is about a missing system - specifically one that captures and resurfaces client context before calls. But there are several layers underneath worth examining.

The Information Problem

There are actually two distinct information challenges bundled together here:

Long-term personal context - This is the accumulated understanding of who this client is, what matters to them, their history, their patterns. It’s the kind of knowledge that builds over months or years of relationship.

Last-session commitments - This is much more tactical. What did we agree they’d do? What did I promise to follow up on? This is accountability tracking.

These require very different capture mechanisms. One is ongoing synthesis, the other is discrete action items.

The Timing Problem

The statement says “before calls” - which raises the question: how far before? Five minutes? The night before? The morning of?

This matters because it determines whether you need a push system (information comes to you) or a pull system (you go get it when you remember to).

The Friction Problem

“Low-friction” appears twice conceptually - in capture AND retrieval. But these often conflict. The more structured and thorough your capture, the more useful your retrieval... but the higher the capture friction.

The Stakes Framing

Here’s where I want to push a bit. The problem escalates quickly from “unprepared” → “risk renewals” → “family financial stability.” That’s a big leap.

Some questions this raises:

Is “showing up unprepared” actually why renewals are at risk? Or is that an assumption? Could renewals be at risk for other reasons that preparation wouldn’t solve?

Is this problem actually happening right now, or is this a fear about what could happen?”

Level 4

The old me would have started building immediately. Hit enter. Watched Claude spin up something impressive.

Shipped it.

Felt productive.

Discovered three days later it solved nothing.

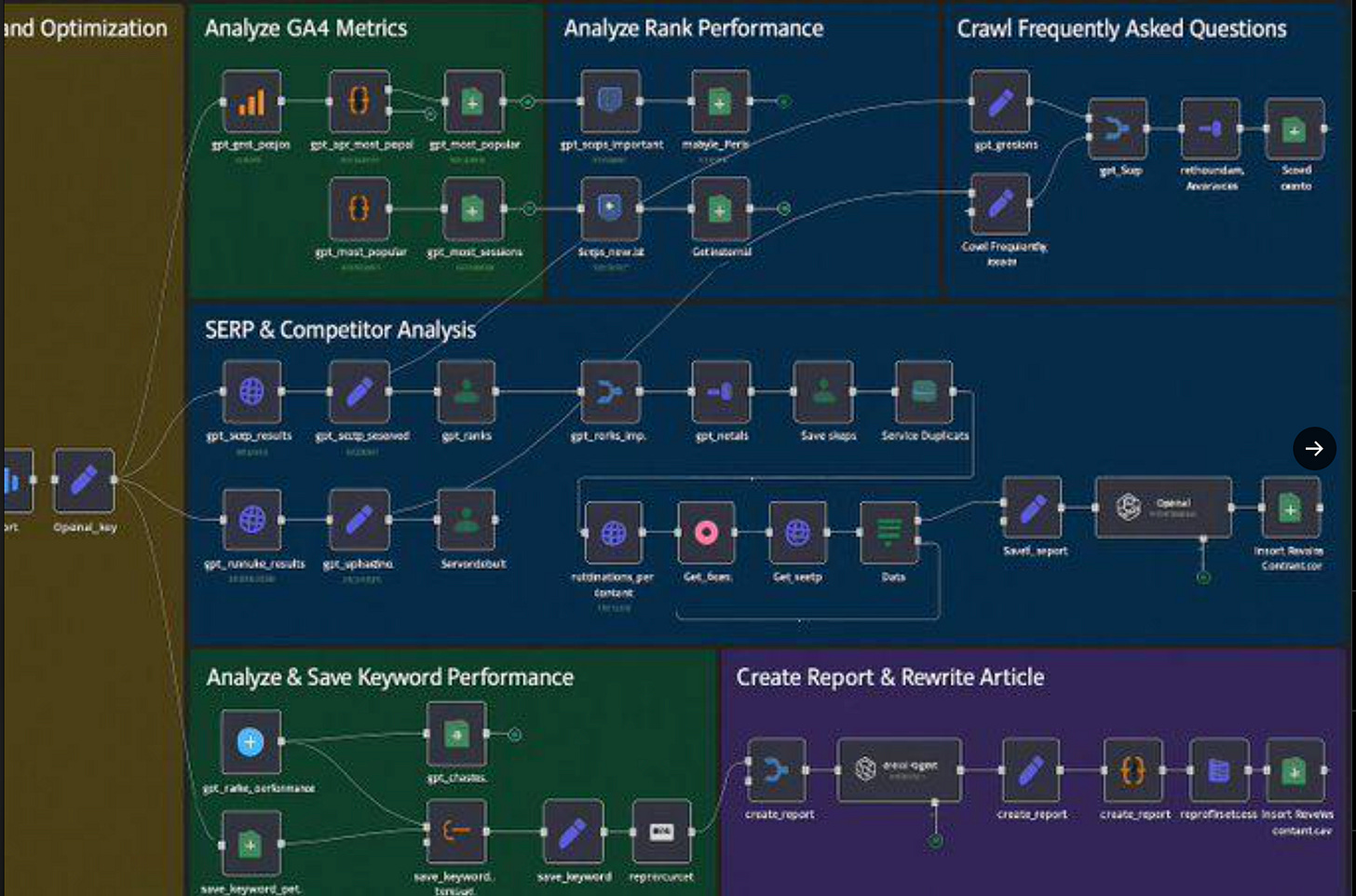

I’ve been burned by that before (be honest, we all have). Hours spent on a workflow that looks impressive but, just like those n8n workflows you see people promoting on the socials, doesn’t actually produce anything meaningful.

Quick aside - you mean to tell me you created a workflow that has saved you 100 hours a week and made you 3M+ in profit and you are just going to give it to a stranger on X or LinkedIn because they retweeted it? Social media…

Takes a deep breath, returns to article…

And that’s when I said it out loud:

“It’s almost like you’re trying to solve from Level 4 when Level 1 hasn’t even been discovered yet.”

The problem people bring you is almost never the actual problem.

It’s a symptom bundled with assumptions, wrapped in a solution-shaped request.

AI makes solving feel free. But solving the wrong problem costs you time rebuilding.

Costs you trust when the solution fails.

Costs you the insight you would have found if you’d drilled first.

The better AI gets, the more expensive this mistake becomes. You can build the wrong thing faster and more impressively than ever.

Doctors. Ducks. Blueprints.

Doctors call it differential diagnosis.

Patient comes in with chest pain. A bad doctor treats the symptom.

A good doctor creates a list of every condition that could cause chest pain.

Heart attack

Acid reflux

Pulmonary embolism

Anxiety

Burrito from Tuesday

Then systematically rules them out.

If an important potential condition is missed, no method will supply the correct conclusion.

The best treatment in the world won’t help if you’re treating the wrong thing.

Programmers call it rubber duck debugging.

Developer stares at the same six lines of code for forty-five minutes. Finally, they explain it line-by-line to a rubber duck. The act of articulating forces them to examine each assumption. Most of the time, they discover the solution mid-sentence. Not because the duck gives advice. Because the explanation reveals what they’d been taking for granted.

The duck doesn’t solve. The duck forces you to discover Level 1.

Architects won’t draw until they understand how the family actually lives. Their daily routine…time spent in each room. They refuse to build until they’ve drilled past “I want an open floor plan” to “I want to see my kids while I’m cooking.”

It’s the same pattern in a different domain.

The people who are great at building are the ones who refuse to build first.

Your Last Seven Days

Think about your last week with AI.

How many times did you paste something in and hit enter before asking:

What am I actually trying to accomplish?

What would success look like?

What assumptions might be wrong?

If you’re like most people…

Just about every darn time.

This isn’t because you’re lazy. It’s because AI products are designed for speed and for keeping you engaged. For creating an affinity that makes you want to come back and spend more time on the platform.

You ask, you get.

The dopamine hits before you’ve finished reading the response.

Except the math doesn’t work.

Every half-solved problem boots you back to the beginning. Every misaligned solution demands a do-over. Every impressive-looking output that doesn’t quite fit wastes more time than typing the original prompt.

The thing that makes AI feel fast is the thing that makes your results slow.

After the Drill

Only after we drilled down on the client prep problem did we build.

Claude created the Client Prep Engine. A Skill that transforms whatever client inputs exist into pre-call briefs. Transcripts, notes, emails. All processed into something incredibly useful.

But here’s what made it good…

Because we’d drilled first, the Skill knew to ask about timing. Knew to separate long-term context from session commitments. Knew to handle the friction problem explicitly.

None of that would have been there if we’d built from the surface request.
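To make that separation concrete, here is a minimal Python sketch. It is not the actual Client Prep Engine Skill (that lives in Claude), just an illustration under my own assumptions: the names `ClientRecord`, `Commitment`, `build_brief`, and `brief_is_due` are hypothetical, and the 30-minute lead time is a placeholder for whatever answer the timing question gets.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Commitment:
    """One discrete action item from the last session."""
    owner: str  # "client" or "coach" (hypothetical labels)
    text: str
    done: bool = False


@dataclass
class ClientRecord:
    """Hypothetical record that keeps the two kinds of information apart."""
    name: str
    long_term_context: list[str] = field(default_factory=list)
    last_session_commitments: list[Commitment] = field(default_factory=list)


def build_brief(client: ClientRecord) -> str:
    """Render a short pre-call brief: context first, then open commitments."""
    lines = [f"Pre-call brief: {client.name}", "", "Long-term context:"]
    lines += [f"- {note}" for note in client.long_term_context] or ["- (none captured yet)"]
    lines += ["", "Open commitments from last session:"]
    open_items = [c for c in client.last_session_commitments if not c.done]
    lines += [f"- [{c.owner}] {c.text}" for c in open_items] or ["- (none open)"]
    return "\n".join(lines)


def brief_is_due(call_start: datetime, now: datetime, lead: timedelta) -> bool:
    """Push-style timing check: True once we are inside the lead window before the call."""
    return call_start - lead <= now < call_start


# Made-up sample data, purely for illustration.
client = ClientRecord(
    name="Sample Client",
    long_term_context=["Wants to step back from day-to-day operations"],
    last_session_commitments=[
        Commitment(owner="client", text="Draft the delegation list"),
        Commitment(owner="coach", text="Send the hiring template", done=True),
    ],
)

call_start = datetime(2025, 1, 6, 10, 0)
if brief_is_due(call_start, now=datetime(2025, 1, 6, 9, 45), lead=timedelta(minutes=30)):
    print(build_brief(client))
```

The point of the sketch is the shape, not the code: long-term context and last-session commitments sit in separate fields because they are captured differently, and the timing check makes the brief come to you instead of waiting for you to remember it.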

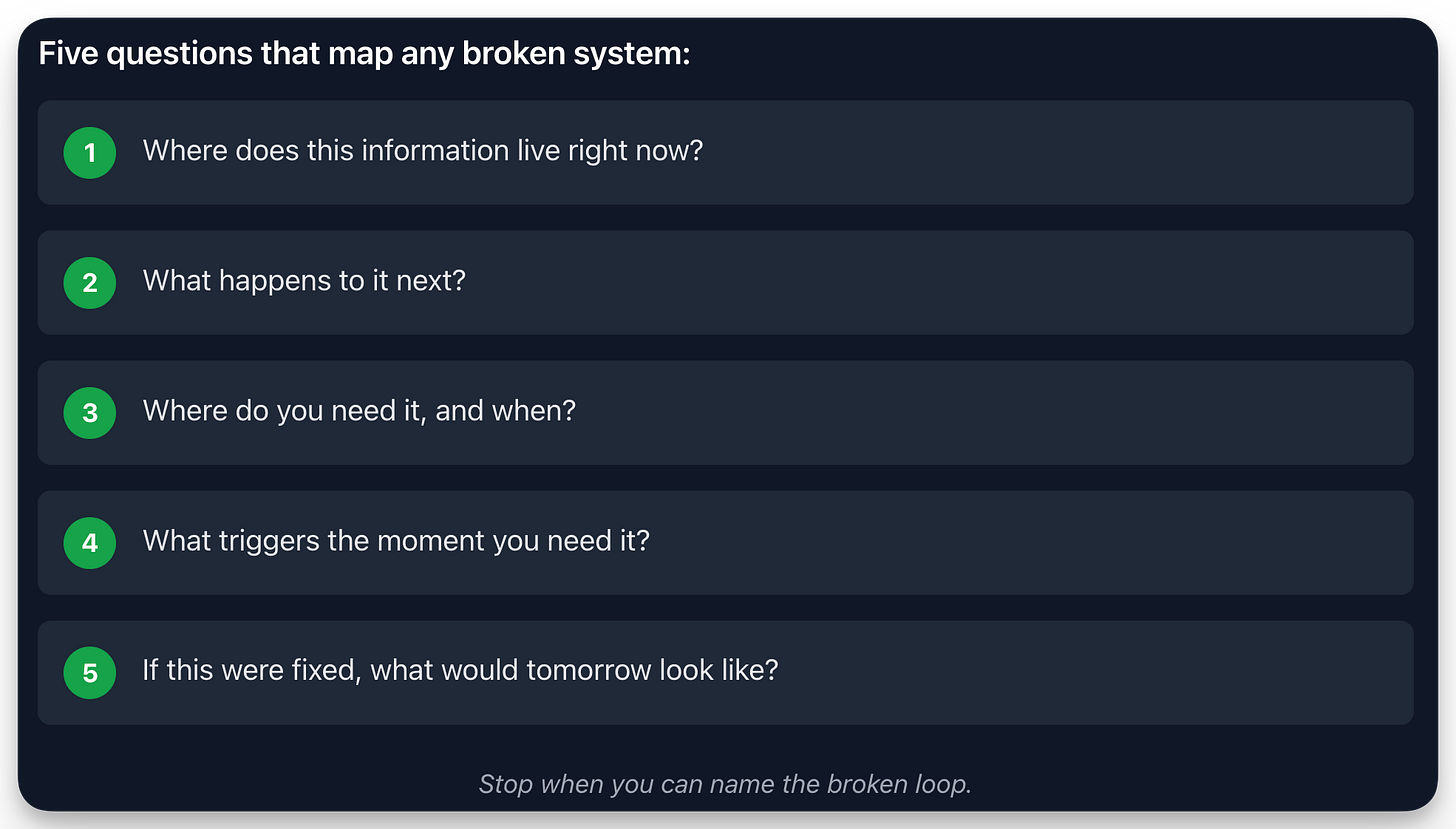

What emerged from the drill-down was a pattern (you know I loveeee my patterns).

These aren’t complicated. But they force you to discover Level 1 before you start solving from Level 4.

You can grab the Client Prep Engine skill.

Or build your own. Let Claude drill before you build.

The old version of you hits enter. The new version asks one question first:

What’s Level 1?

-Max

If you enjoyed this article and this training, we just started a community where our goal is to run more experiments and help people think differently about AI.

You can lock in the entry price of $27/month for life and even give it a spin for 7 days to see if ya like it.

Check out Beware The Default here.