AI Was Supposed to Close the Expertise Gap. Here's What Happened Instead.

Everyone said AI would be the great equalizer.

The playing field would flatten. The little guy would finally have access to the same intellectual horsepower as the big guy. We’d all be geniuses now.

But the gap is widening, and three groups are emerging:

1. Domain Experts Using AI

Their output has a different frequency to it. There’s something in the work that you can’t quite name, but you recognize it immediately. They’re pulling ahead of everyone.

2. Domain Experts Not Using AI

They’re doing excellent work, but they can’t match the velocity of Group One. It’s like power-walking while someone sprints past you. You’ll get there eventually (and look a little ridiculous doing it), but they’ll be three towns over.

3. AI Users Without Domain Expertise

They're getting exposed. They're posting without the nuance that comes from doing the work. When their outputs get pressure-tested, they collapse. The first time a client pushes back, it's obvious.

The tool that was supposed to help them is now the thing that gives them away.

Default Has a Smell

Here’s what happened...

AI gives you the average of the internet.

It’s technically correct, but it’s usually a “best practice” or the default answer: the kind of thing that sounds right in a meeting but doesn’t actually move anything forward.

And default answers have a smell.

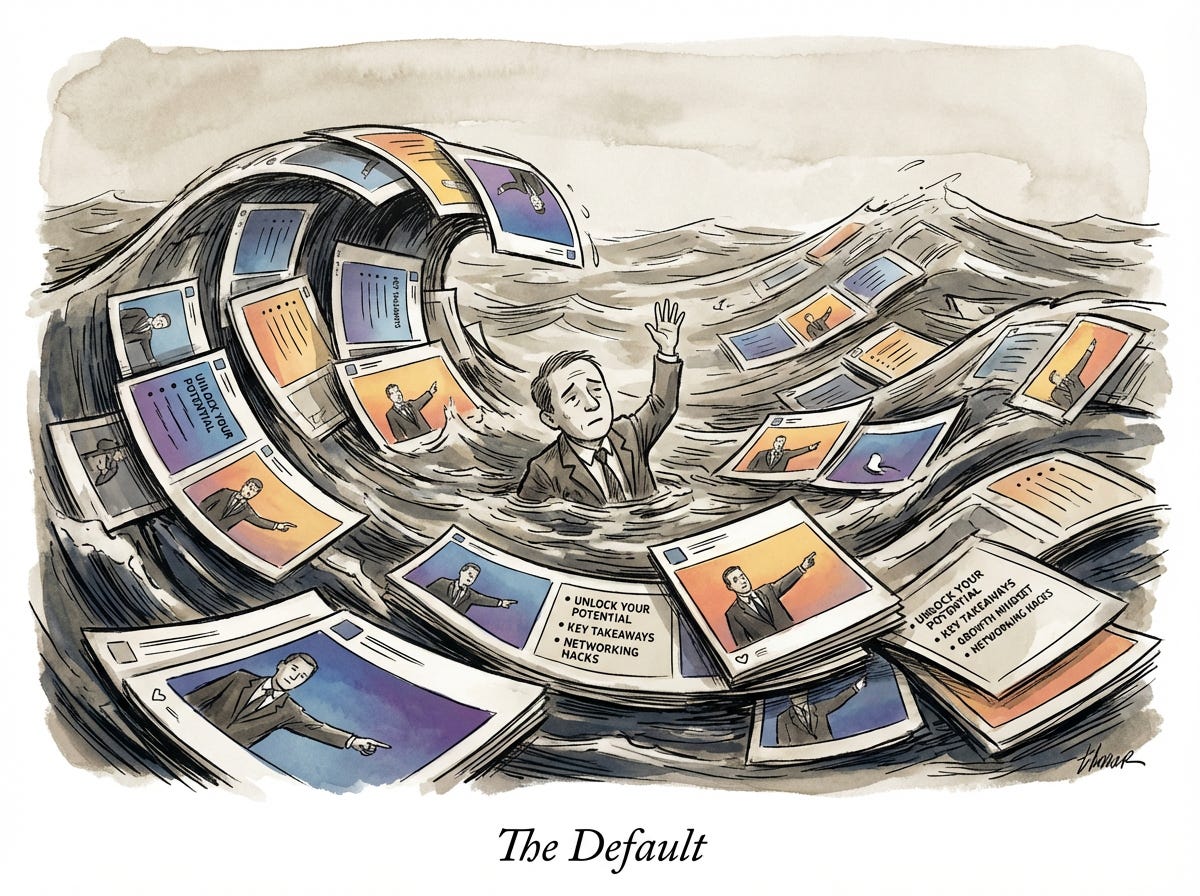

It’s the advice you’d find in a LinkedIn carousel from 2019, complete with the gradient background and the guy pointing at bullet points. It’s not wrong, but it’s indistinguishable from the 47 other posts saying the exact same thing.

Let’s look at some numbers.

The flood is real. Ahrefs analyzed 900,000 new web pages in April 2025 and found that 74% contained AI-generated content. Three out of four new pages. A separate Graphite study found that AI-generated articles now outnumber human-written ones on the open web.

We’re drowning in default.

The novice boost is real. A Stanford and NBER study tracked what happened when customer support agents got access to an AI assistant. Novices saw a 34% productivity boost. With two months of AI access, they performed like agents with six months of experience.

For some work, that’s a win. If you’re producing templates or first drafts or anything where volume matters more than judgment, the boost is genuinely useful.

But in knowledge work? Consulting, strategy, anything where clients are paying for your thinking? That 34% boost is a trap. You can produce the output now. You just can’t defend it.

Your nephew who graduated last spring can now produce work that looks, on the surface, like it came from someone with years of experience.

The expert paradox is real.

The same study found the most experienced and highest-skilled workers saw minimal gains. In some cases, quality actually declined when they used AI.

At first glance, that seems backwards.

The issue wasn’t the tool. It was how they used it. Experts who let AI do the thinking lost what made them experts. The ones who used it as a force multiplier, pouring their judgment through it instead of deferring to it, are the ones pulling ahead.

But what exactly is that judgment they’re pouring through? What do experts have that doesn’t live in the training data?

The Better You Get, The Less You Can Explain

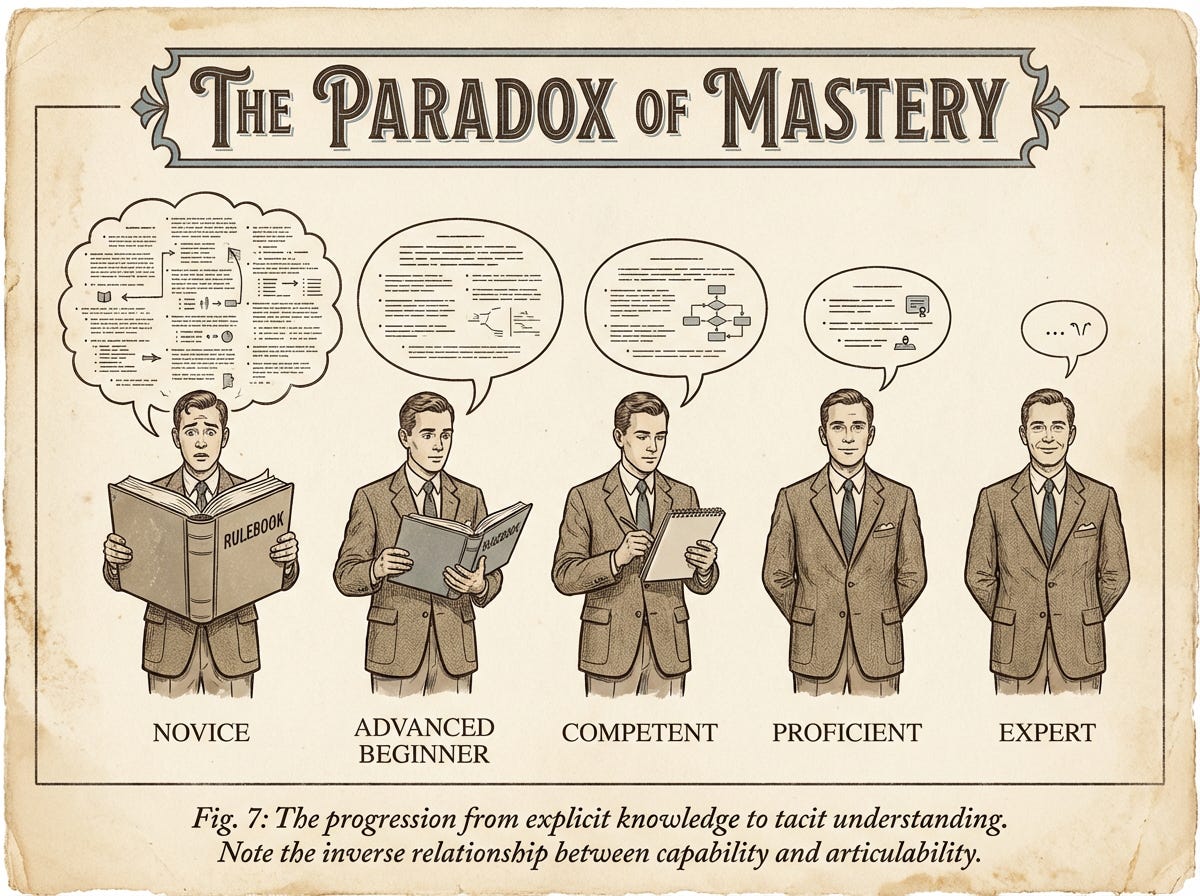

UC Berkeley researchers Stuart and Hubert Dreyfus spent a decade studying how people move from novice to expert.

They mapped five stages:

Novice: Follows rigid rules (and panics when the rules don’t apply)

Advanced Beginner: Recognizes patterns (and starts to improvise, badly)

Competent: Makes plans, sets priorities (and takes responsibility for outcomes)

Proficient: Sees situations holistically (and starts trusting their gut)

Expert: Operates from intuition (and can’t explain why)

The critical finding buried in their research: the journey to expertise is a journey away from explainability.

The better you get, the less you can articulate what you’re doing.

In their words: “When things are proceeding normally, experts don’t solve problems and don’t make decisions; they do what normally works.”

Novices follow the rules. Experts know when to break them.

This is where domain expertise actually lives: in the micro-decisions.

The “except when...” and “yes but in this case...”

The conditional knowledge that signals this person has been in the trenches, not someone who typed a question into a box and copy-pasted the first thing that came back.

AI can give you the rule.

What it cannot give you is the 50,000 to 100,000 domain-specific chunks that experts have accumulated over years: patterns compressed into instant recognition that fire before conscious thought even kicks in.

Chess masters see the board before they calculate.

Just watch Magnus Carlsen play 10 opponents simultaneously, blindfolded.

“…but Magnus cannot see the boards he’s facing the other way. So he has to keep track of the positions of 320 pieces blind…I just can’t fathom what you’ve just done. It seems like it’s supernatural.”

Surgeons feel when something’s wrong before the monitors catch it.

Firefighters know which way the fire will move before it moves.

Gary Klein studied these people for decades:

ER doctors

Firefighters

Chess grandmasters

Military commanders

What he found is that experts recognize patterns instead of comparing options. Their first instinct is usually right because they’ve seen this exact situation before, or something close enough, thousands of times.

That recognition is pre-conscious.

It fires before they can explain it.

And AI cannot replicate it because AI is trained on what people wrote down: the explicit knowledge, the stuff that made it onto the page. The 10%.

The other 90% never got documented.

It lives in the nervous system of people who’ve been doing the work. You can’t download it. You can’t prompt your way to it.

You have to earn it the old-fashioned way…

By being confused for years until you’re not.

The 545% Difference Nobody Could See

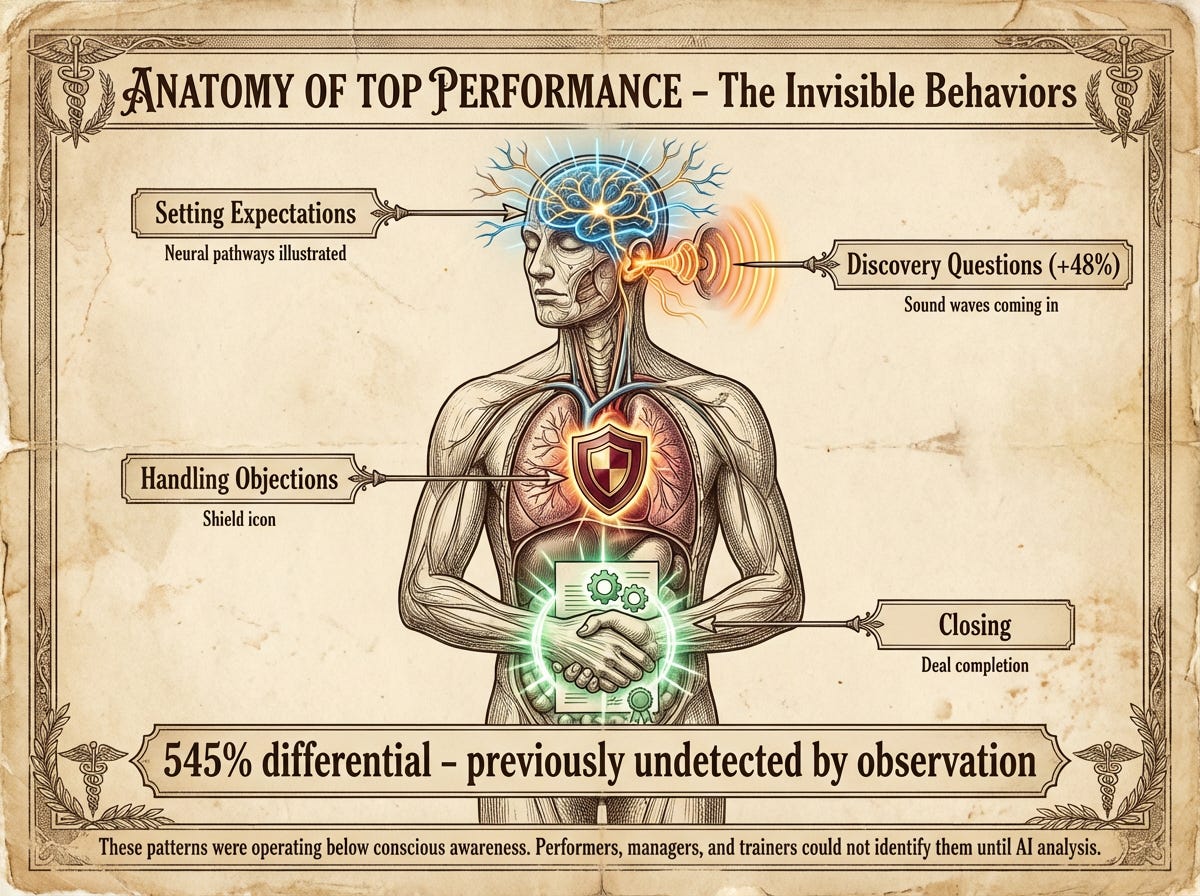

A company wanted to figure out what made their top sales performers different.

These people were closing deals at 2-3x the rate of everyone else. Same product, same market, same scripts. Wildly different results.

They studied 1,000 of them.

First, they asked the top performers to explain their secret.

The performers couldn’t.

They said things like “I just read the room” and “I go with my gut” and “I don’t know, it just works.”

Then they asked managers and observers to identify what made these people different.

45% of organizations had “no defined or visible insight” into what separated top performers from average ones.

Almost half of the companies studying their own best people had no idea what made them the best.

The expertise was invisible to everyone, including the experts themselves.

Then Cresta ran AI analysis on thousands of conversation transcripts.

Not asking people what they did. Watching what they actually did.

What emerged: The “Magic 4” Behaviors.

Top performers asked 48% more discovery questions and were 545% better at four specific micro-behaviors:

Setting expectations

Asking discovery questions

Handling objections

Closing

Not 5% better. Not 50% better. Five hundred and forty-five percent better.

These patterns were operating below conscious awareness.

The performers didn’t know they were doing them.

The managers couldn’t see them.

The trainers couldn’t teach them.

The behaviors were so automatic, so woven into the fabric of how these people worked, that they were completely invisible until AI surfaced them.

This is Polanyi’s Paradox, named after the philosopher and scientist Michael Polanyi, who articulated it in 1966:

“We know more than we can tell.”

By some estimates, 90% of expertise operates unconsciously.

The more skilled you become, the less you can explain what you’re doing because your knowledge compiles into automatic processes that fire without conscious thought.

Ask an expert how they do what they do, and you’ll get approximately 3,000 versions of “I don’t know, I just see it.”

Years of pattern recognition, compressed into a shrug.

But here’s the thing: AI can detect these patterns in transcripts and behavior, but it cannot transfer them to someone who hasn’t built them through years of repetition.

The Cresta study revealed what the top performers were doing, but it did not suddenly make average performers able to do it.

That’s like showing someone a video of Michael Jordan dunking and expecting them to walk onto the court and do the same thing.

Knowing the pattern exists and having the pattern encoded in your nervous system are two very different things.

What This Actually Means

What happens when everyone has access to the same default answers?

The people without domain expertise produce default content.

And now there’s more default content than ever: 74% of new pages, the average of the internet duplicated at scale, a tsunami of “10 Tips for Better Productivity” and “How to Leverage Synergies” washing over everything.

The experts stand out more now, not less.

A 2025 German study tracking AI’s impact on workers found that “expert workers with deep domain knowledge gain while non-experts often lose.”

The rich get richer.

The people who already knew things now have a force multiplier. The people who were faking it have a magnifying glass pointed at them.

The BCG and Harvard study with 758 consultants found a “jagged technological frontier” where even experts couldn’t always tell which tasks AI could handle and which required human judgment.

The highly skilled workers who thrived were the ones who continued to validate AI outputs and exert what the researchers called “cognitive effort and expert judgment.”

They used AI as a tool without outsourcing their thinking to it.

(There’s a difference between having a sous chef and having someone else eat your dinner.)

So much for democratization.

AI sharpened the contrast between people who actually know things and people who just got better at sounding like they do.

Turns out that’s what happens when everyone gets access to the same default answers.

The Three Paths Forward

If you’re in Group One (experts using AI):

You’re pulling ahead, but the question is whether you can see your own invisible expertise well enough to extract it, package it, and stop being the bottleneck.

Your “Magic 4” behaviors are in there somewhere. You just can’t see them yet because you’re too busy using them.

If you’re in Group Two (experts not using AI):

You can’t keep pace with Group One by staying analog, but your expertise is the asset and AI is just the accelerant.

The years you spent building pattern recognition don’t disappear when you open ChatGPT. They become more valuable as the default content floods in.

Your job is to pour your expertise through the tool.

If you’re in Group Three (AI users without domain expertise):

The tool that was supposed to help you is now the thing that gives you away.

There’s no shortcut to the “except when” knowledge.

There’s only the work, the reps, the years of seeing patterns until they become automatic. AI can speed up some of that learning, but it cannot replace it.

The truth is that you still have to earn the thing that makes the output valuable.

The democratization narrative got the direction wrong.

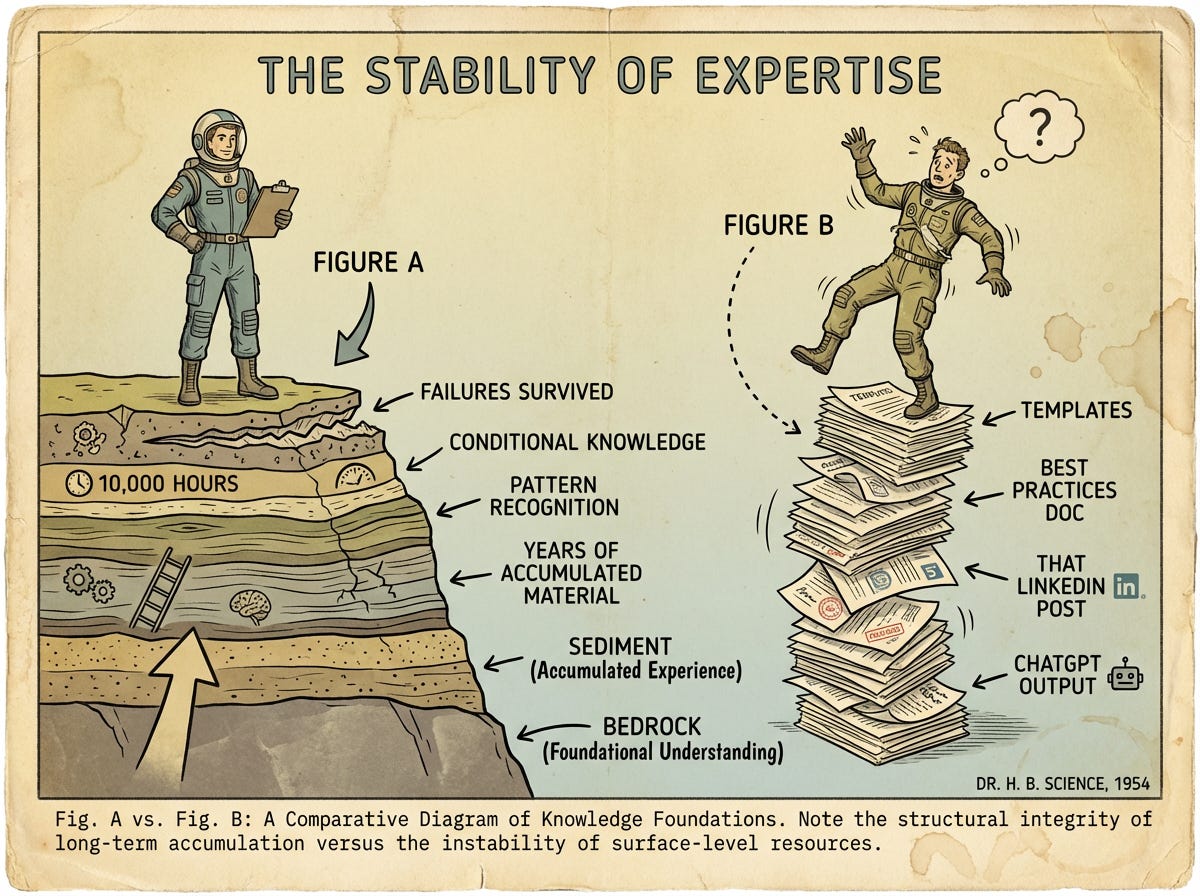

AI revealed who was standing on solid ground and who was standing on a stack of templates.

The Same Problem Shows Up in Your Offer

These signals don’t just show up in your content. They also show up in your offer:

Your proposal

Your sales page

Your LinkedIn profile

The thing you send when someone asks “what do you do?”

If that sounds like everyone else in your category, you’re competing on price.

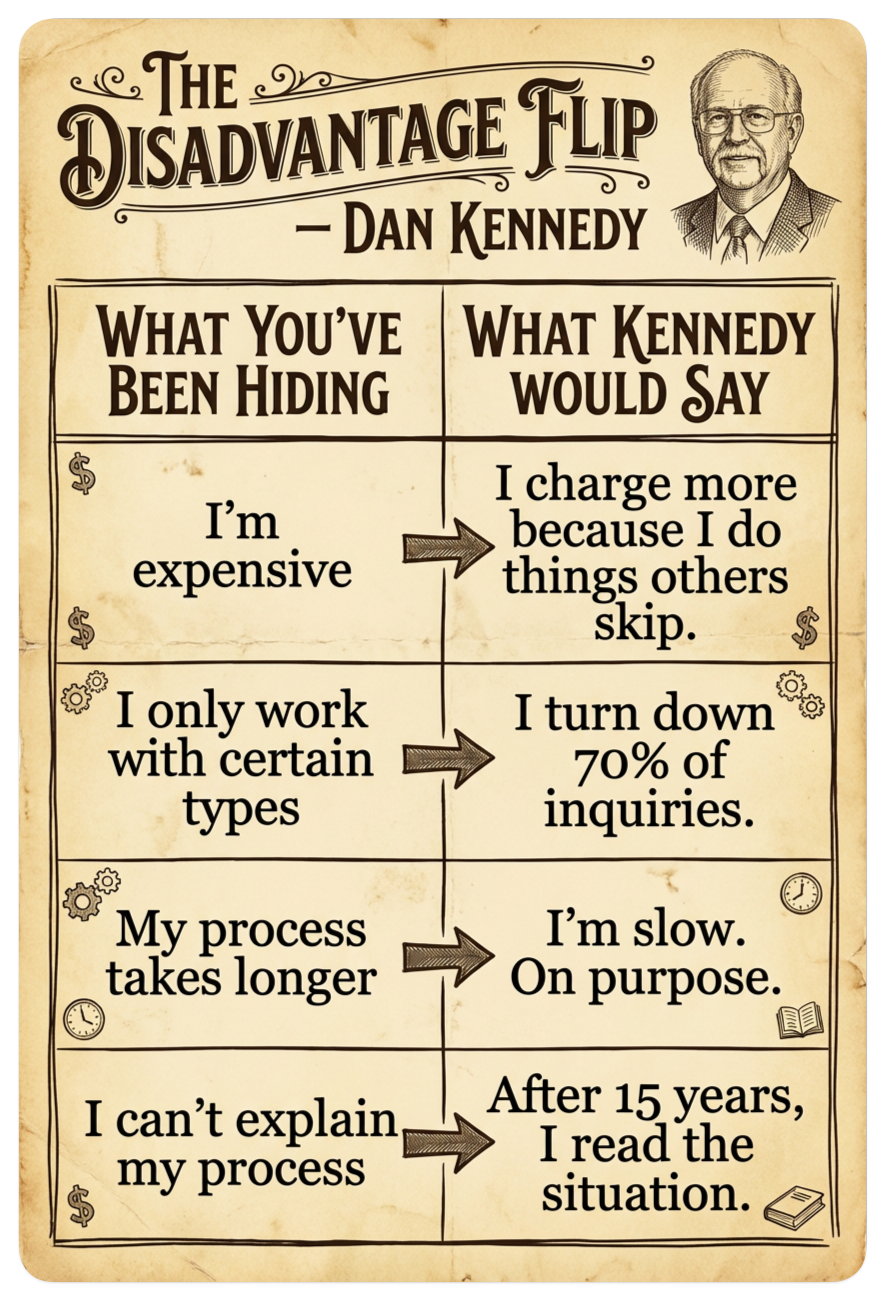

Dan Kennedy built a $100M+ direct response empire asking one question:

“Why should I do business with you versus any and every other option available to me, including doing nothing?”

Most offers fail that test. The expertise is there. It’s just invisible to the person writing the offer.

It surfaces with the right questions.

The Kennedy Interrogation

“Why should I, your perfect prospect, choose to do business with you versus any and every other option available to me, including doing it myself, going with a competitor, or doing nothing at all?”

Kennedy calls himself “The Professor of Harsh Reality.” He doesn’t accept weak answers. Neither should you.

This is a two-part process. First, the interrogation. Then, the rewrite.

What You Actually Get

Level 1: The Answers

Eight responses about what makes you different. That’s the obvious output.

Level 2: The Patterns

Somewhere around question 4 or 5, you’ll notice something. The same theme keeps showing up. The thing you’ve been hiding. The “disadvantage” you apologize for. The opinion you won’t put on your website.

That’s your fingerprint starting to emerge.

Level 3: The Reframe

Kennedy’s move is taking what you’ve been apologizing for and turning it into proof you know what you’re doing. “I’m expensive” becomes “You’re paying for mistakes you won’t make.” “I’m slow” becomes “Fast is how you end up redoing it.”

The weakness was the differentiator all along.

Level 4: The Offer That Can’t Be Copied

By the end, you’ll have language that only works because of who you specifically are. A competitor can’t steal it because it wouldn’t be true for them.

That’s what makes it defensible.

Part 1: Face the Eight Questions

Paste this prompt into Claude. Answer each question honestly. It will push back when you hedge.

You can stop anytime. Say “done” and it’ll summarize what it learned. But the more questions you answer, the sharper your fingerprint becomes.